Merck + Furhat Robotics

The world’s first pre-screening robot

Imagine a robot that could help passersby discover their risk factors for diabetes, hypothyroidism and alcoholism. Then imagine how on earth you would go about building it. Together with Merck & Furhat Robotics, that’s exactly what we did, and in doing so we created an entirely new diagnostic technology for healthcare.

In Brief

- A Furhat robot able to pre-screen people for three conditions.

- Created new workflows and processes to develop voice interfaces iteratively.

- Became a masterclass in how to codify human behaviour.

- Overcame limitations of speech-synthesis and voice recognition technologies.

Tech stack

Furhat Robotics 2nd Generation Robot & SDK • Kotlin • React • Amazon Polly Text-to-speech • Google Speech Recognition


How it began

We trained for this

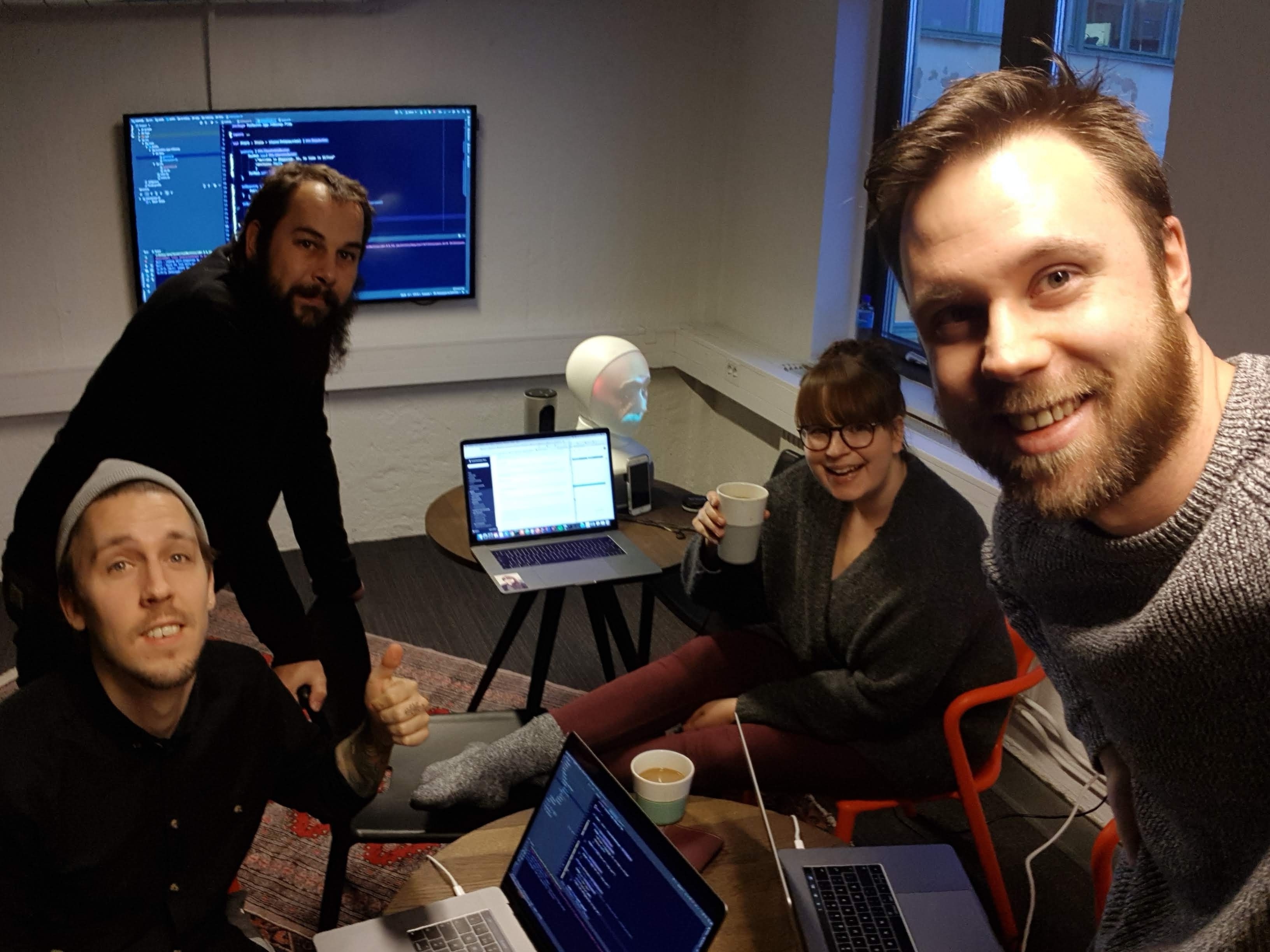

In early 2018, Pontus Österberg borrowed a robot, then still a prototype, from the startup Furhat Robotics. The futuristic face came along to our Prototyping Bootcamp, where we started to tinker and experiment with it. By the end of the week, we’d created a one-card draw game with the robot in which, to win, you had to uncover the robot’s tell. The Furhat team loved the creativity behind the technical solution and invited us to one of their hackathons. And so began our beautiful friendship with Furhat Robotics and their robots.

Forward thinking pharma

Fast-forward a little, and we meet Merck. They had been imagining a new use for the Furhat robot: as a diagnostic nurse. Their vision was ambitious. Diagnose three different conditions (diabetes, hypothyroidism and alcoholism), with a great bedside manner to match. And it needed to be ready for a showcase in three months. We had already hacked together two skills for the robot and had become leading experts in the Furhat platform. We thought we knew what we were getting ourselves into. Oh, were we wrong.

"We were convinced that, despite the uncertainty, Furhat & Prototyp would be able to help us find a solution to increase awareness and diagnosis of these diseases" - Petra Sandholm, Merck

The team

Petra Sandholm

Merck

Gustav Aspengren

Merck

Björn Helgeson

Tech Lead, Prototyp

Yoav Luft

Developer, Prototyp

Joe Mendelson

Project Coordinator, Furhat Robotics

Gabriel Skantze

Dialog System Advisor, Furhat Robotics

The unknowns

A blank screen

There are no best practices for building a voice-controlled robot. In fact, there is basically no guidance out there at all, and herein lay the biggest challenge. We had an idea of how to go about the project: plan out the diagnostic question flows, then code them. Whether that would work in practice remained to be seen.

"You can't just Google how to build a voice-controlled robot!" - Björn Helgeson, Tech Lead

We needed to implement the right diagnostic techniques, ones that could be converted from detailed questionnaires into casual conversations. And then there was the bedside manner. We had to code the robot not only to understand the multitude of possible answers to each question, but also to interact in a natural style that felt human. Oh, and we were building it all in Kotlin, a JVM language interoperable with Java, which the Furhat Robotics SDK uses.
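To make "questionnaire into conversation" a little more concrete, here is a minimal Kotlin sketch of a screening flow kept as data: an ordered list of questions, each worth some points on a yes, with a referral threshold at the end. This is not PETRA’s code and uses no Furhat SDK API; the class names, point weights and threshold are hypothetical placeholders (not clinical values), and each answer is assumed to have already been classified as yes or no.

```kotlin
// Hypothetical sketch: a questionnaire modelled as an ordered list of
// conversational questions. Points and threshold are placeholders,
// NOT clinical values.
data class Question(val id: String, val prompt: String, val pointsIfYes: Int)

data class ScreeningResult(val score: Int, val refer: Boolean)

class ScreeningFlow(
    private val questions: List<Question>,
    private val referralThreshold: Int
) {
    private val answers = mutableMapOf<String, Boolean>()
    private var index = 0

    /** The next prompt for the robot to speak, or null when the flow is finished. */
    fun nextPrompt(): String? = questions.getOrNull(index)?.prompt

    /** Records a yes/no answer to the current question and moves on. */
    fun answer(yes: Boolean) {
        val q = questions.getOrNull(index) ?: error("Flow already finished")
        answers[q.id] = yes
        index++
    }

    /** Sums points for every "yes" and compares the total to the threshold. */
    fun result(): ScreeningResult {
        val score = questions.sumOf { q -> if (answers[q.id] == true) q.pointsIfYes else 0 }
        return ScreeningResult(score, score >= referralThreshold)
    }
}
```

Keeping the questions as data rather than hard-coded branches makes it cheap to reword or reorder them between test sessions, which matters when design and build happen in tandem.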

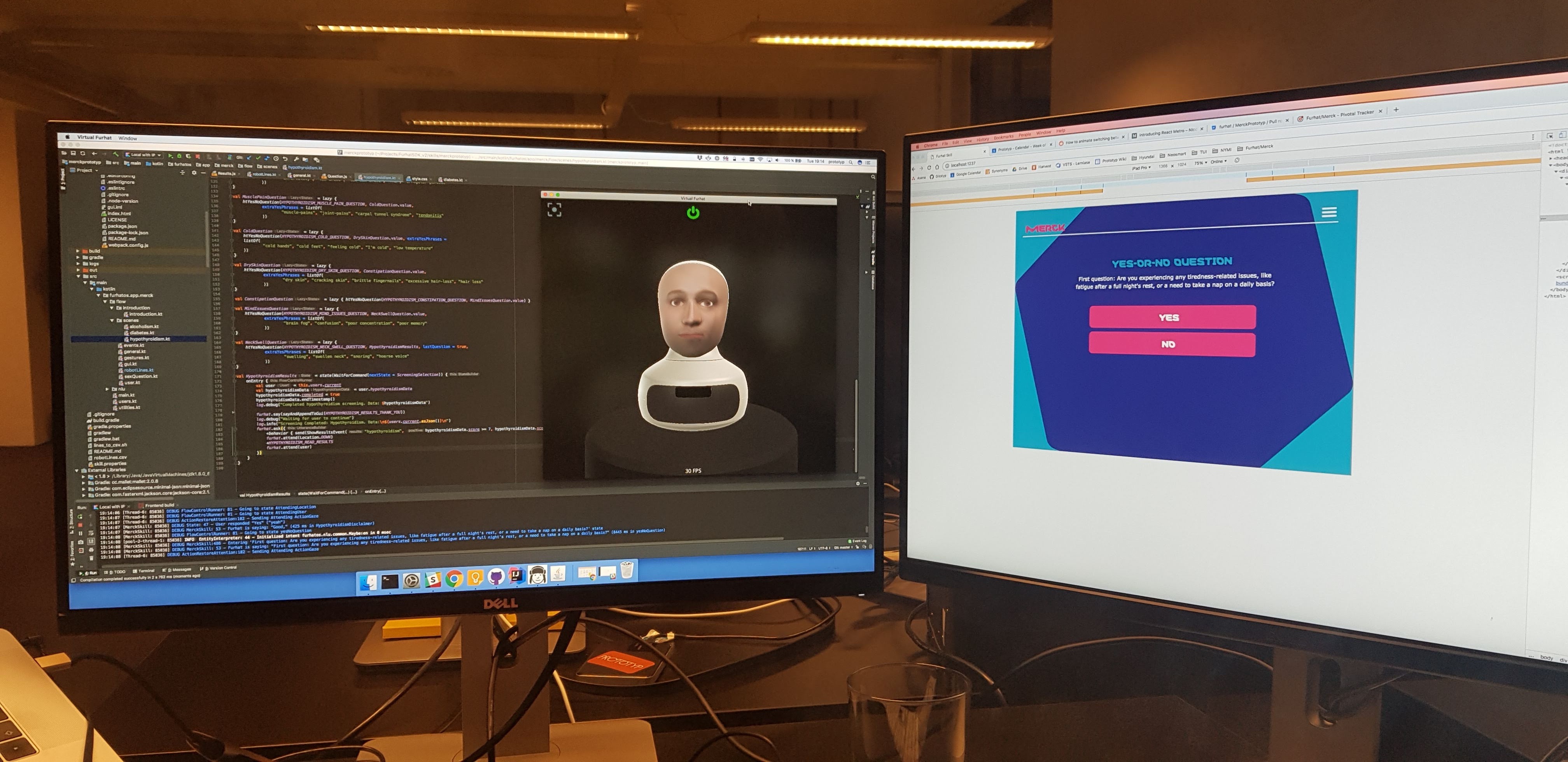

The first demo

The first prototype we demo’d for the team integrated one diagnosis flow with speech synthesis and speech recognition. The robot was set up in a test room. The Furhat team gave the robot a run for its money, and it did well. It was able to hold a conversation through the diabetes diagnosis and gently recommend a next step. Success!

Unfortunately, when the Merck team went in and did the same, the robot failed. We hadn’t counted on their charming Swenglish accents confusing the robot. It was just another dimension we had to cater for when it came to making the robot human.

A bit disheartened but much emboldened, we pressed on. The failure highlighted the most difficult part of the project: making a robot’s conversation skills smart enough to interact naturally with people.

"It was incredible to see the robot sitting there, conversing with a patient. While it didn't all go according to plan, we could see the vision coming to life." - Petra Sandholm, Merck

Pivots & perspectives

We had to rethink rather a lot during these three months. Our workflow changed entirely, from designing the interactions and then building them, to an intensely iterative process where we were designing and building in tandem.

What makes us human?

Ever noticed all those subtle things we humans do in conversation? Neither had we, until now. You know, like averting your gaze, umming and erring, and micro-pausing. It’s these details that make a conversation feel natural, positive and helpful. All of a sudden we found ourselves coding psychology and behavioural principles, something we could not have done without expert help from KTH professor Gabriel Skantze.
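The tech stack lists Amazon Polly for text-to-speech, and Polly accepts SSML input, including `<break time="..."/>` tags for pauses. As an illustration only (we are not claiming PETRA was built this way), here is a Kotlin sketch that turns a list of clauses into SSML with an optional opening filler and short micro-pauses between clauses. The `humanize` function, the filler word and the pause lengths are hypothetical choices, and the actual call to Polly is left out.

```kotlin
// Hypothetical sketch: build Polly-compatible SSML with fillers and micro-pauses.

/** Escapes XML special characters so spoken text can't break the SSML markup. */
fun escapeXml(text: String): String = text
    .replace("&", "&amp;")
    .replace("<", "&lt;")
    .replace(">", "&gt;")
    .replace("\"", "&quot;")
    .replace("'", "&apos;")

/**
 * Wraps clauses in <speak>, optionally opening with a filler such as "Hmm,"
 * followed by a slightly longer pause, and inserts short breaks between clauses.
 */
fun humanize(
    clauses: List<String>,
    filler: String? = null,
    fillerPauseMs: Int = 400,
    clausePauseMs: Int = 250
): String {
    val sb = StringBuilder("<speak>")
    if (filler != null) {
        sb.append(escapeXml(filler)).append("<break time=\"${fillerPauseMs}ms\"/>")
    }
    clauses.forEachIndexed { i, clause ->
        if (i > 0) sb.append("<break time=\"${clausePauseMs}ms\"/>")
        sb.append(escapeXml(clause.trim()))
    }
    return sb.append("</speak>").toString()
}
```

For example, `humanize(listOf("Thanks.", "Next question."))` yields two clauses separated by a 250 ms break. Gaze aversion and other facial behaviour would be handled on the robot side, not in the speech markup.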

"We were combining so many different disciplines, we were no longer just engineering software, we were hacking human behaviour." - Yoav Luft, Developer

Testing, testing, 1, 2, 3

Tried-and-tested user-testing methods for visual interfaces didn’t work. Each time we coded fixes based on failures in user tests, we caused new problems for the next testers. We found ourselves in a vicious feedback loop, which we eventually broke free of through sheer will.

Influencing the Furhat platform

The Furhat platform was still very young and under active development, so we worked closely with the Furhat team to get the support our work needed. We discovered that the platform had to be extended to support some features, such as sideways glancing, a very common human action. We also developed the ability to log and aggregate data, to report on how many patients were referred for further checkups.
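Reporting on referrals boils down to logging one event per screening and aggregating afterwards. The Kotlin sketch below is hypothetical: the `ScreeningEvent` shape, its field names and the in-memory store are assumptions for illustration, and a real deployment would persist the log rather than keep it in memory.

```kotlin
// Hypothetical event logged at the end of a screening conversation,
// one per condition assessed.
data class ScreeningEvent(val condition: String, val referred: Boolean)

// In-memory log; a real deployment would persist events somewhere durable.
class ScreeningLog {
    private val events = mutableListOf<ScreeningEvent>()

    fun record(event: ScreeningEvent) {
        events += event
    }

    /** Number of referrals per condition; conditions with no referrals are omitted. */
    fun referralsByCondition(): Map<String, Int> =
        events.filter { it.referred }.groupingBy { it.condition }.eachCount()

    /** Fraction of logged screenings that ended in a referral, or 0.0 if none were logged. */
    fun referralRate(): Double =
        if (events.isEmpty()) 0.0 else events.count { it.referred }.toDouble() / events.size
}
```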

Finished product

Merck wanted to be first with AI, robots and pharmaceuticals. And so it was. On Tuesday 27th of November, PETRA was born.

She can greet a passerby and converse naturally, gently diagnose them for three conditions, and refer them for help if needed.

Built as a standalone robot application, PETRA can handle multiple users and has a convincing set of facial gestures as well as gaze behaviour. We also built a graphical user interface (made in React) to aid those who aren’t entirely comfortable interacting with a robot.

PETRA’s first appearance was at the tech hub Epicenter in Stockholm. In her two weeks there, she diagnosed many people who would not even have thought to have this conversation with their doctor, never mind a robot.

Now you’ll find PETRA travelling around Europe, inspiring the industry to push new technology to find creative solutions to our health issues. And who knows where she’ll pop up in the future? Maybe at your local pharmacy.

Key takeaways

Codifying human behaviour

How many ways can you answer yes to a question? Yes, yep, yeah, yaha, absolutely, yaas... any more? These are just a few. PETRA can understand a virtually endless number of them. That sums up the complexity of this project: one truly built on the details.
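How might a robot cope with all those ways of saying yes? This is not Furhat SDK code and not PETRA’s implementation; it is a deliberately simple, hypothetical Kotlin sketch that normalizes a speech-recognition transcript and matches it against hand-written word lists. The word lists and function names are illustrative assumptions, and anything ambiguous falls through to `UNKNOWN` so the robot can ask again.

```kotlin
enum class YesNo { YES, NO, UNKNOWN }

// Illustrative word lists only; real speech input is far messier.
private val YES_WORDS = setOf("yes", "yep", "yeah", "yup", "yaas", "absolutely", "sure", "definitely")
private val NO_WORDS = setOf("no", "nope", "nah", "never")
private val NO_PHRASES = listOf("not really", "i don't think so", "not at all")

/** Lower-cases, replaces punctuation with spaces and collapses whitespace. */
fun normalize(transcript: String): String =
    transcript.lowercase()
        .replace(Regex("[^a-z' ]"), " ")
        .replace(Regex("\\s+"), " ")
        .trim()

/** Classifies a transcript; multi-word negatives are checked first, on word boundaries. */
fun classifyYesNo(transcript: String): YesNo {
    val text = normalize(transcript)
    val padded = " $text "
    if (NO_PHRASES.any { padded.contains(" $it ") }) return YesNo.NO
    val words = text.split(" ").toSet()
    val yes = words.any { it in YES_WORDS }
    val no = words.any { it in NO_WORDS }
    return when {
        yes && !no -> YesNo.YES
        no && !yes -> YesNo.NO
        else -> YesNo.UNKNOWN // ambiguous ("yes and no") or off-topic: ask again
    }
}
```

Word lists like these only go so far; they illustrate why covering "virtually endless" phrasings took so much iteration.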

"It was probably the most difficult project I've worked on because it was multi-disciplinary." - Björn Helgeson, Tech Lead

PETRA was an incredibly rewarding project, born from experimentation at our Prototyping Bootcamps. It used every skill we had and required so much more from us. She truly pushed us to break new ground.

